Hung-Jui Guo, Hiranya Garbha Kumar and Balakrishnan Prabhakaran

Submitted to: ACM Transactions on Multimedia Computing, Communications, and Applications (TOMM), currently under review.

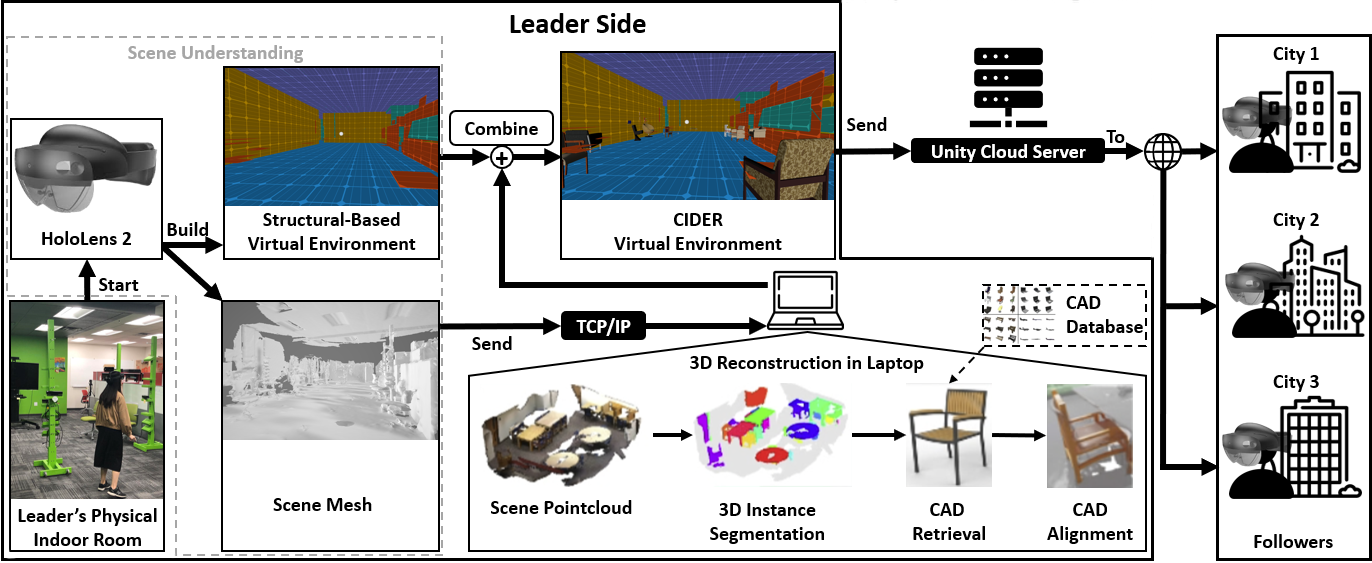

A Mixed Reality system designed for long-distance collaboration using a Leader-Follower paradigm. It integrates low-latency, dynamic, real-time 3D reconstruction of real-world scenes and supports object interactions in both the real and virtual worlds.

Hung-Jui Guo, Laura R. Marusich, Jonathan Z. Bakdash, Shulan Lu, Andrew M. Tague, Reynolds, J. Ballotti, Derek Harter, Omeed Eshaghi Ashtiani and Balakrishnan Prabhakaran

Submitted to: IEEE International Conference on Multimedia & Expo (ICME) 2025, currently under review.

This research reports a behavioral experiment with 59 participants that manipulated the color, luminance, and rendered distance of virtual objects in a HoloLens 2 MR environment. The experiment examined the effects of these three variables on human depth perception of the virtual objects. Results indicated that objects with cool colors (green and blue) tended to be perceived as closer than objects with warm colors (red and yellow), and that objects with high luminance tended to be perceived as closer than those with low luminance. This result differs from those reported in previous related MR work.

Hung-Jui Guo, Hiranya Garbha Kumar, Minhas Kamal and Balakrishnan Prabhakaran

Published at: Proceedings of the 32nd ACM International Conference on Multimedia (ACM MM'24).

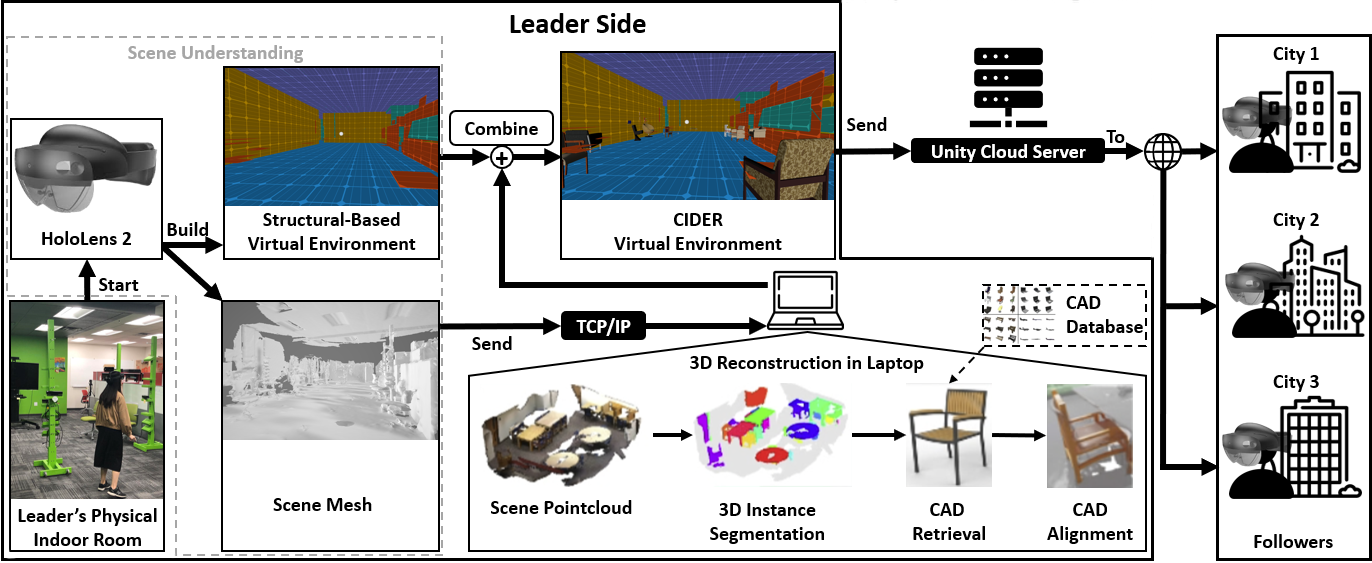

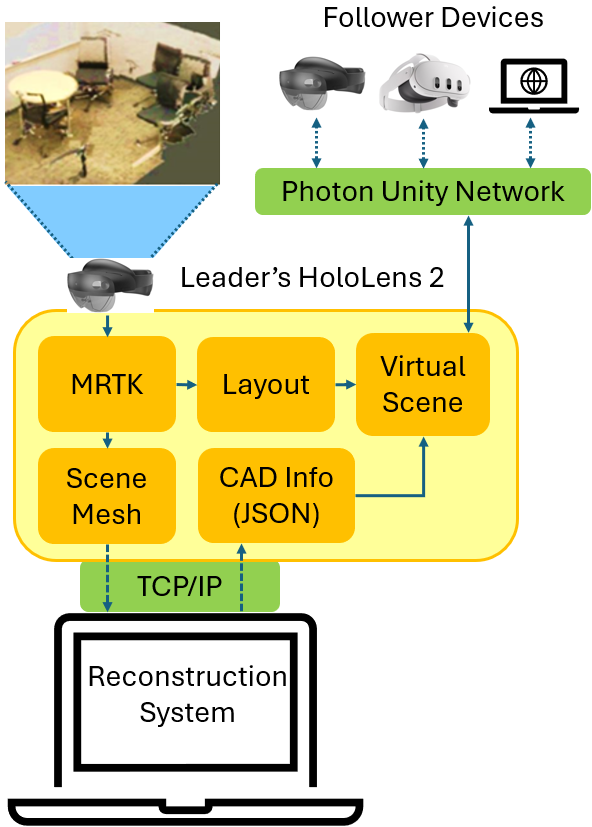

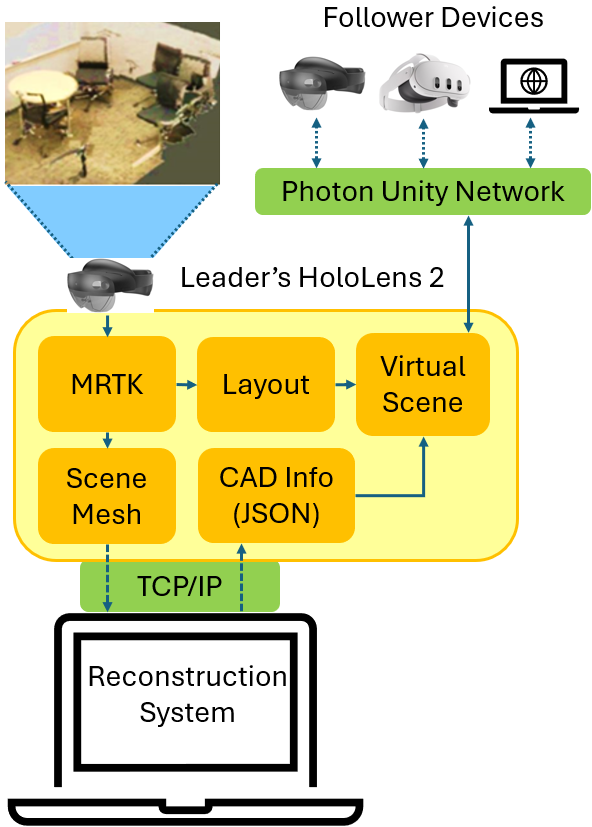

This paper introduces Room2XR, a framework designed to dynamically reconstruct real-world scenes as semantically similar virtual representations using CAD models, and to share them for remote collaboration. Room2XR employs advanced scene understanding technologies to create detailed virtual environments, enabling multiple users to interact with and manipulate these spaces in real time. By leveraging efficient algorithms and data transmission methods, Room2XR remains accessible and usable even on low-bandwidth networks.

Hung-Jui Guo and Balakrishnan Prabhakaran

Published at: IEEE International Conference on Multimedia Information Processing and Retrieval (MIPR) 2024

In this paper, we first propose an improved formula with normalization as a motion parallax evaluation standard and evaluate it by conducting motion parallax experiments using a Microsoft HoloLens 2 device. The experiments consisted of a standard one with different relative moving distances and distance reporting methods (blind walking and oral reporting), and another using an X-ray vision system that helps users see through physical obstacles, potentially providing motion parallax. The aim was to assess the effectiveness of our proposed standard and evaluate whether the proposed conditions influence users’ ability to estimate distance by utilizing motion parallax.

Balakrishnan Prabhakaran and Hung-Jui Guo

Published at: HCI International Conference 2024

Current citation number: 25

In this paper, we systematically evaluate the sensors built into the HoloLens 2 Mixed Reality (MR) head-mounted device (HMD), including the RGB cameras, eye cameras, depth cameras, and microphone array, to show the progress made over the previous HoloLens generation. Moreover, we process and evaluate the accuracy of data collected from the HoloLens 2 using Azure Spatial Anchor and the research mode Inertial Measurement Unit (IMU) to show the innovations of this device. Based on the evaluation results, we provide discussion and suggestions to help future researchers design applications with the existing capabilities.

Omeed Eshaghi Ashtiani, Hung-Jui Guo and Balakrishnan Prabhakaran

Published at: Frontiers in Virtual Reality

We analyze the effects of motion cues, color, and luminance on depth estimation of AR objects overlaid on the real world with optical see-through (OST) displays. We perform two experiments that differ in environmental lighting conditions (287 lux and 156 lux) and analyze the resulting effects on depth and speed perception.

Hung-Jui Guo, Jonathan Z. Bakdash, Laura R. Marusich and Balakrishnan Prabhakaran

Published at: IEEE Conference on Virtual Reality and 3D User Interfaces 2023.

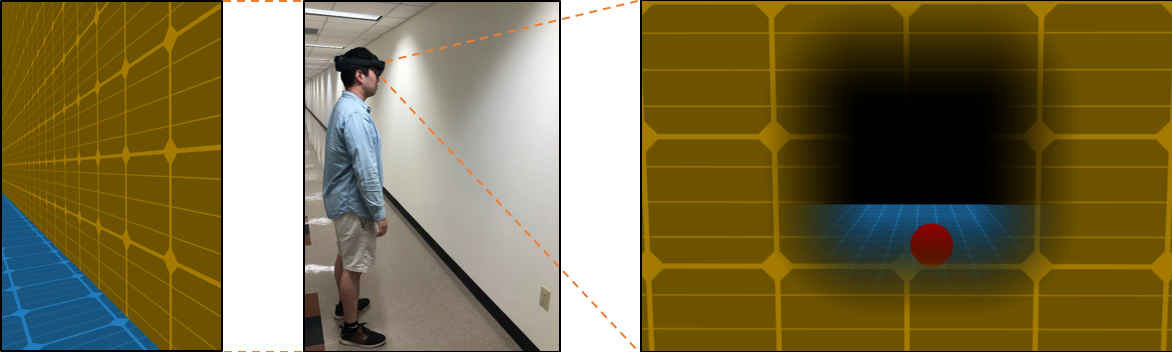

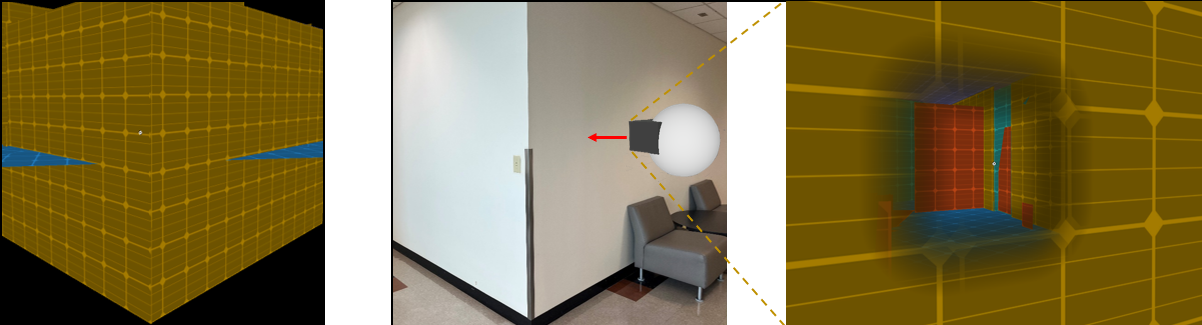

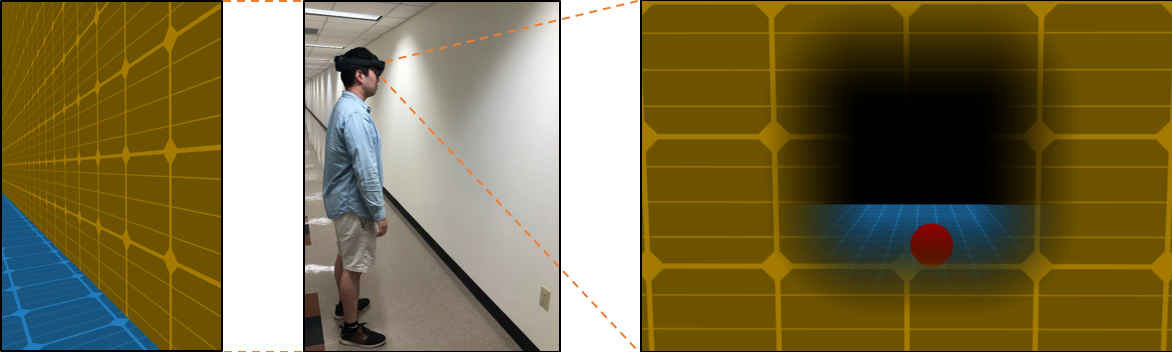

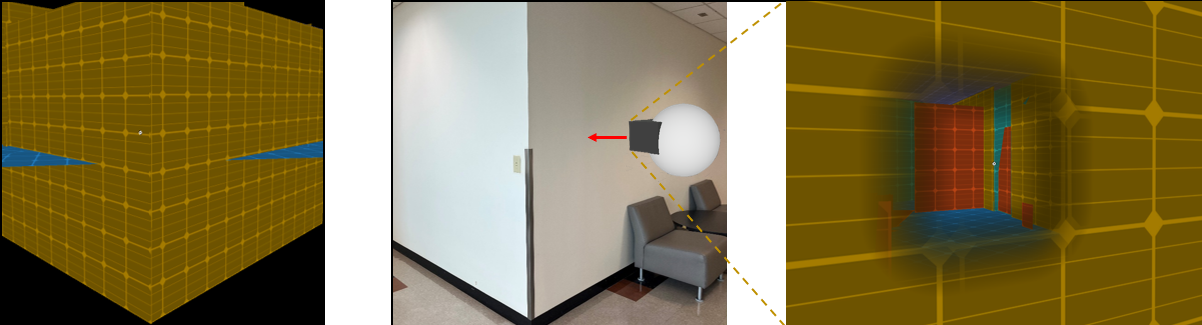

In this paper, we used the HoloLens 2 MR head-mounted device (HMD) to build a virtual environment and a dynamic X-ray vision window based on that environment. Unlike previous work, our system allows users to move physically while retaining X-ray vision in real time, providing depth cues and additional information about the surrounding environment. In addition, we designed an experiment and recruited multiple participants as a proof of concept of the system's capabilities.

Hung-Jui Guo, Jonathan Z. Bakdash, Laura R. Marusich and Balakrishnan Prabhakaran

Published at: Virtual Reality Software and Technology (VRST) 2022.

Current citation number: 4

In this paper, we demonstrate a dynamic X-ray vision window that is rendered in real time based on the user's current position and changes as the user moves through the physical environment. Moreover, the location and transparency of the window are dynamically rendered based on the user's eye gaze. We build this X-ray vision window for the HoloLens 2, a current state-of-the-art MR head-mounted device (HMD), by integrating several features: scene understanding, eye tracking, and clipping primitive.

Hung-Jui Guo, Jonathan Z. Bakdash, Laura R. Marusich and Balakrishnan Prabhakaran

Published at: IEEE Transactions on Instrumentation and Measurement (TIM), Impact Factor: 5.332.

Current citation number: 16

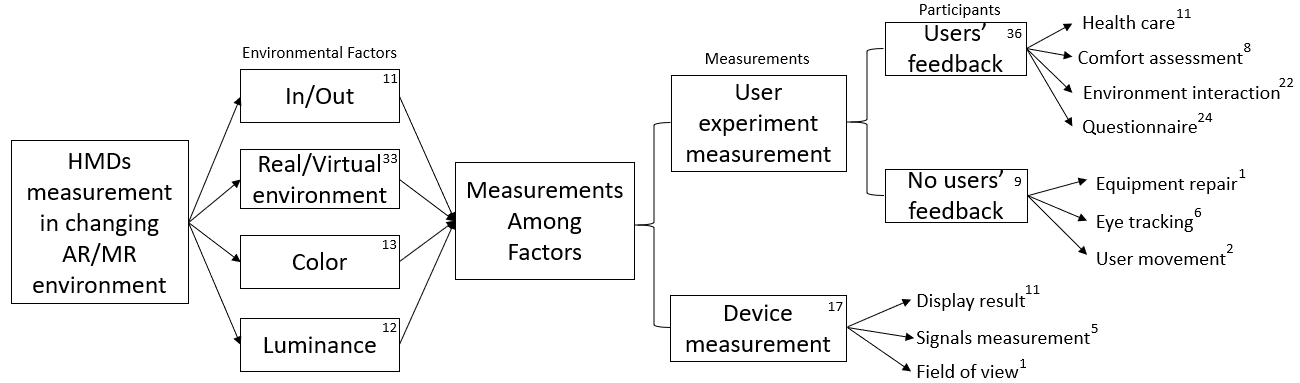

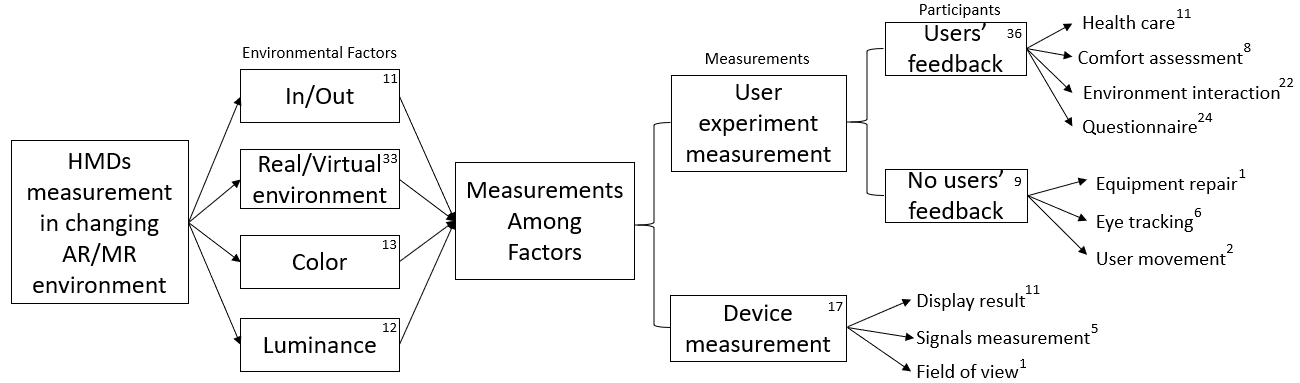

In this paper, we survey 87 environment-related HMD papers with measurements from users, spanning over 32 years. We provide a thorough review of AR- and MR-related user experiments with HMDs under different environmental factors. We then summarize trends in this literature over time using a new classification method with four environmental factors, the presence or absence of user feedback in behavioral experiments, and ten main categories to subdivide these papers (e.g., domain and method of user assessment). We also categorize characteristics of the behavioral experiments, showing similarities and differences among papers.

Yu-Yen Chung, Hung-Jui Guo, Hiranya Garbha Kumar and Balakrishnan Prabhakaran

Published at: 2020 IEEE International Conference on Artificial Intelligence and Virtual Reality (AIVR). (2020)

Current citation number: 7

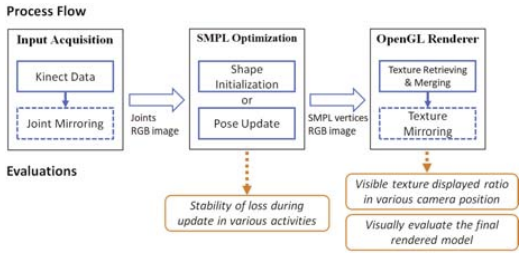

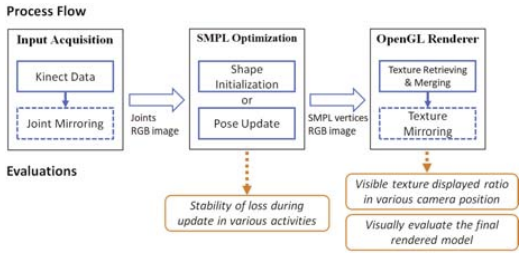

We created a Mixed Reality game that uses several RGB-D cameras to capture users' motion and map their texture onto a 3D human avatar in virtual environments. The game is intended to provide exercise and help relieve phantom pain. (Please read the abstract for further information.)

My main contribution to this paper was transferring the texture from the frame to the image and mirroring the texture onto the missing limb.

Wei-Ta Chu and Hung-Jui Guo

Published at: Workshop on Multimodal Understanding of Social, Affective and Subjective Attributes. (2017)

Current citation number: 76

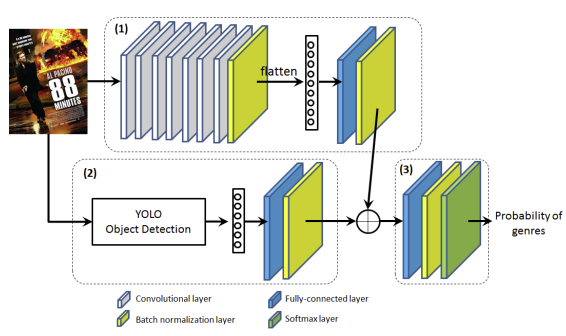

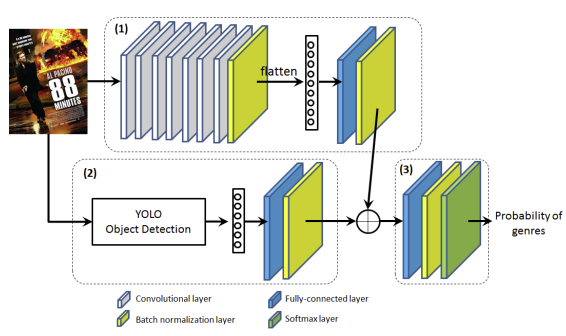

We used movie posters to train a neural network model that classifies movies into multiple genres. Because a movie can belong to several genres at once, this is a multi-label task, and the work lays a foundation for movie genre classification from posters.

My main contribution to this work was collecting a large-scale movie poster dataset and building a custom neural network that classifies movie genres using that dataset.

This paper was my main research topic during my Master's degree.

More info on Google Scholar